3.解析模块的使用

推荐使用pyquery

Beautiful Soup

安装

pip install bs4或者

pip install beautifulsoup4用途

BeautifulSoup 就是 Python 的一个 HTML 或 XML 的解析库,我们可以用它来方便地从网页中提取数据

解析器比较

BeautifulSoup 支持的解析器及优缺点

Python标准库

BeautifulSoup(markup, "html.parser")

Python的内置标准库、执行速度适中 、文档容错能力强

Python 2.7.3 or 3.2.2)前的版本中文容错能力差

LXML HTML 解析器

BeautifulSoup(markup, "lxml")

速度快、文档容错能力强

需要安装C语言库

LXML XML 解析器

BeautifulSoup(markup, "xml")

速度快、唯一支持XML的解析器

需要安装C语言库

html5lib

BeautifulSoup(markup, "html5lib")

最好的容错性、以浏览器的方式解析文档、生成 HTML5 格式的文档

速度慢、不依赖外部扩展

文档

用法

简单用例

样本

导入模块

解析数据

运行结果:

节点选择器

选择元素

实例:

运行结果:

提取信息

获取名称

可以利用 name 属性来获取节点的名称

实例:选择title,调用name属性得到节点的名称

运行结果:

获取属性

调用 attrs 获取所有属性

运行结果:

获取内容

利用 string 属性获取节点元素包含的文本内容

运行结果:

嵌套选择

实例:获取head节点内部的title节点

运行结果:

关联选择

子节点和子孙节点

获取直接子节点可以调用 contents 属性

实例:获取body节点下子节点p

运行结果:返回结果是列表形式

可以调用 children 属性,得到相应的结果:

运行结果:

要得到所有的子孙节点的话可以调用 descendants 属性

运行结果:

父节点和祖先节点

要获取某个节点元素的父节点,可以调用 parent 属性:

实例:获取节点a的父节点p下的内容

运行结果:

要想获取所有的祖先节点,可以调用 parents 属性

运行结果:

兄弟节点

next_sibling :获取节点的下一个兄弟节点

previous_sibling:获取节点上一个兄弟节点

next_siblings :返回所有前面兄弟节点的生成器

previous_siblings :返回所有后面的兄弟节点的生成器

实例:

运行结果:

提取信息

获取一些信息,比如文本、属性等等

运行结果:

方法选择器

常用查询方法:find_all()、find()

find_all()

查询所有符合条件的元素

语法:

name

根据节点名来查询元素

运行结果:返回结果类型为:bs4.element.Tag

获取ul下的li节点以及li下的文本内容

运行结果:

attrs

根据属性来进行查询

运行结果:

对于常用的属性比如id,class,可以不用attrs传递

运行结果:

text

text 参数可以用来匹配节点的文本,传入的形式可以是字符串,可以是正则表达式对象

运行结果:

find()

find() 方法返回的是单个元素,即第一个匹配的元素,而 find_all() 返回的是所有匹配的元素组成的列表

返回结果:返回类型为<class 'bs4.element.Tag'>

其他查询方法

find_parents() find_parent()

find_parents() 返回所有祖先节点,find_parent() 返回直接父节点。

find_next_siblings() find_next_sibling()

find_next_siblings() 返回后面所有兄弟节点,find_next_sibling() 返回后面第一个兄弟节点。

find_previous_siblings() find_previous_sibling()

find_previous_siblings() 返回前面所有兄弟节点,find_previous_sibling() 返回前面第一个兄弟节点。

find_all_next() find_next()

find_all_next() 返回节点后所有符合条件的节点, find_next() 返回第一个符合条件的节点。

find_all_previous() 和 find_previous()

find_all_previous() 返回节点后所有符合条件的节点, find_previous() 返回第一个符合条件的节点

CSS选择器

相关链接:http://www.w3school.com.cn/cssref/css_selectors.asp。

使用 CSS 选择器,只需要调用 select() 方法,传入相应的 CSS 选择器

实例:

运行结果:

嵌套选择

实例:select() 方法同样支持嵌套选择,例如我们先选择所有 ul 节点,再遍历每个 ul 节点选择其 li 节点

运行结果:

获取属性

获取属性还是可以用上面的方法获取

运行结果:

获取文本

获取文本可以用string 属性,还有一种方法那就是 get_text(),同样可以获取文本值。

运行结果:

细节

推荐使用 LXML 解析库,必要时使用 html.parser

节点选择筛选功能弱但是速度快

建议使用 find()、find_all() 查询匹配单个结果或者多个结果

如果对 CSS 选择器熟悉的话可以使用 select() 选择法

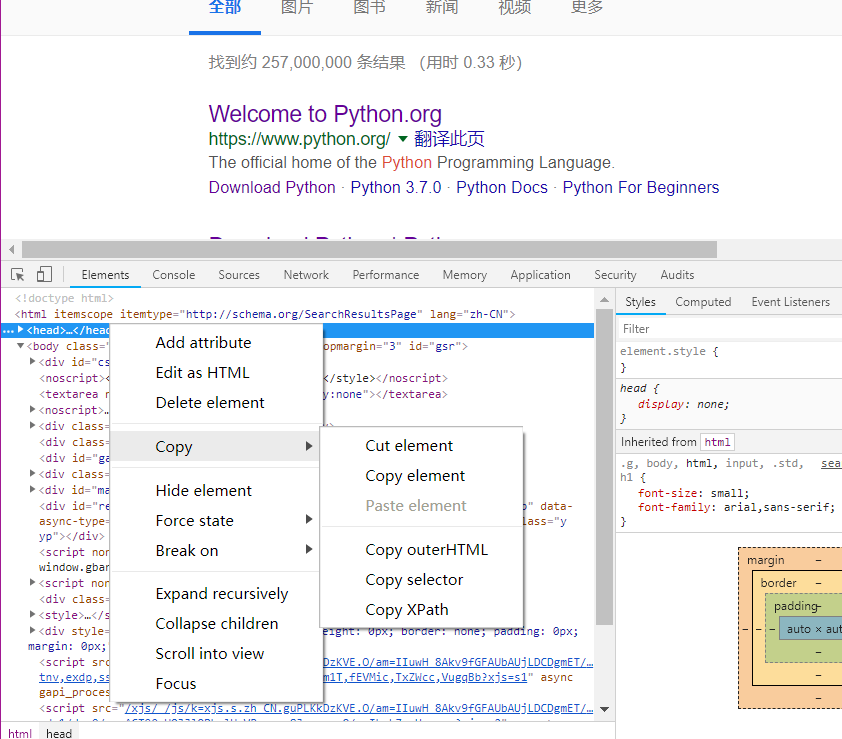

如何匹配规则不是熟练,而且想快速获取,可以如下操作:

右键

Pyquery

安装

用途

PyQuery允许像JQuery一样对快速对xml(lxml)文档进行元素查询、元素操作的python库。如果熟悉JQuery的api,那么掌握PyQuery是一件十分容易的事情,因为PyQuery的api和JQuery的api基本上一致。PyQuery是一款基于lxml的库,而lxml能够快速处理xml和html文档。

文档

用法

初始化

PyQuery 初始化的时候也需要传入 HTML 数据源来初始化一个操作对象,它的初始化方式有多种,比如直接传入字符串,传入 URL,传文件名

字符串初始化

运行结果:

url初始化

指定传入的参数为url

运行结果:

但是不建议这样做,应该配合请求库一起使用

文件初始化

传入参数指定为 filename

CSS选择器

通过css选择器,筛选出所需的数据

运行结果:

.class

.intro

选择 class="intro" 的所有元素。

#id

#firstname

选择 id="firstname" 的所有元素。

*

*

选择所有元素。

element

p

选择所有

元素。

element,element

div,p

选择所有元素和所有

元素。

elementelement

div p

选择元素内部的所有

元素。

element>element

div>p

选择父元素为元素的所有

元素。

element+element

div+p

选择紧接在元素之后的所有

元素。

[attribute]

[target]

选择带有 target 属性所有元素。

[attribute=value]

[target=_blank]

选择 target="_blank" 的所有元素。

[attribute~=value]

[title~=flower]

选择 title 属性包含单词 "flower" 的所有元素。

[attribute|=value]

[lang|=en]

选择 lang 属性值以 "en" 开头的所有元素。

:link

a:link

选择所有未被访问的链接。

:visited

a:visited

选择所有已被访问的链接。

:active

a:active

选择活动链接。

:hover

a:hover

选择鼠标指针位于其上的链接。

:focus

input:focus

选择获得焦点的 input 元素。

:first-letter

p:first-letter

选择每个

元素的首字母。

:first-line

p:first-line

选择每个

元素的首行。

:first-child

p:first-child

选择属于父元素的第一个子元素的每个

元素。

:before

p:before

在每个

元素的内容之前插入内容。

:after

p:after

在每个

元素的内容之后插入内容。

:lang(language)

p:lang(it)

选择带有以 "it" 开头的 lang 属性值的每个

元素。

element1~element2

p~ul

选择前面有

元素的每个

元素。

[attribute^=value]

a[src^="https"]

选择其 src 属性值以 "https" 开头的每个 元素。

[attribute$=value]

a[src$=".pdf"]

选择其 src 属性以 ".pdf" 结尾的所有 元素。

[attribute*=value]

a[src*="abc"]

选择其 src 属性中包含 "abc" 子串的每个 元素。

:first-of-type

p:first-of-type

选择属于其父元素的首个

元素的每个

元素。

:last-of-type

p:last-of-type

选择属于其父元素的最后

元素的每个

元素。

:only-of-type

p:only-of-type

选择属于其父元素唯一的

元素的每个

元素。

:only-child

p:only-child

选择属于其父元素的唯一子元素的每个

元素。

:nth-child(n)

p:nth-child(2)

选择属于其父元素的第二个子元素的每个

元素。

:nth-last-child(n)

p:nth-last-child(2)

同上,从最后一个子元素开始计数。

:nth-of-type(n)

p:nth-of-type(2)

选择属于其父元素第二个

元素的每个

元素。

:nth-last-of-type(n)

p:nth-last-of-type(2)

同上,但是从最后一个子元素开始计数。

:last-child

p:last-child

选择属于其父元素最后一个子元素每个

元素。

:root

:root

选择文档的根元素。

:empty

p:empty

选择没有子元素的每个

元素(包括文本节点)。

:target

#news:target

选择当前活动的 #news 元素。

:enabled

input:enabled

选择每个启用的 元素。

:disabled

input:disabled

选择每个禁用的 元素

:checked

input:checked

选择每个被选中的 元素。

:not(selector)

:not(p)

选择非

元素的每个元素。

::selection

::selection

选择被用户选取的元素部分。

查找节点

pyquery的常用的查询函数,这些函数和 jQuery 中的函数用法完全相同

子节点

查找子节点需要用到 find() 方法,传入的参数是 CSS 选择器

运行结果:

子孙节点

find() 的查找范围是节点的所有子孙节点,而如果只想查找子节点,那可以用 children() 方法

运行结果:

实例:筛选出子节点class属性值为active

运行结果:

父节点

可以用 parent() 方法来获取某个节点的父节点

实例:返回class属性值list当前节点的父节点下的内容

运行结果:

祖先节点

parents() 方法会返回所有的祖先节点

结果会有两个节点

运行结果:

筛选出指定父节点

实例:筛选出属性值为wrap的父节点

运行结果:

兄弟节点

要获取兄弟节点可以使用 siblings() 方法

运行结果:

筛选某个指定兄弟节点

运行结果:

遍历

对于多个节点的结果,需要遍历来获取了

实例:把每一个 li 节点进行遍历,,需要调用 items() 方法

运行结果:

获取信息

获取属性

获取文本

获取属性

使用attr() 方法获取属性

有两种获取属性方法:

运行结果:

当返回结果包含多个节点时,调用 attr() 方法只会得到第一个节点的属性

运行结果:

获取文本

调用 text() 方法,就可以获取其内部的文本信息了,它会忽略掉节点内部包含的所有 HTML,只返回纯文字内容

运行结果:

获取这个节点内部的 HTML 文本,就可以用 html() 方法

运行结果:

在多个节点的情况下,html() 方法返回第一个 li 节点的内部 HTML 文本,而 text() 返回所有的 li 节点内部纯文本

节点操作

PyQuery 提供了一系列方法来对节点进行动态修改操作

add_class、remove_class

添加、删除类属性

返回结果:

attr、text、html

attr() 方法如果只传入第一个参数属性名,则是获取这个属性值,如果传入第二个参数,可以用来修改属性值,text() 和 html() 方法如果不传参数是获取节点内纯文本和 HTML 文本,如果传入参数则是进行赋值

返回结果:

remove

移除

实例:

运行结果:

例子:移除p节点

运行结果:

另外其实还有很多节点操作的方法,比如 append()、empty()、prepend() 等方法,这些函数和 jQuery 的用法是完全一致的。

伪类选择器

例如选择第一个节点、最后一个节点、奇偶数节点、包含某一文本的节点等等

实例:

运行结果:

最后更新于

这有帮助吗?